How to Measure the Sensitivity of a Sensor

Understanding Sensor Sensitivity

1. What Exactly is Sensor Sensitivity?

Ever wondered how your smartphone knows to brighten the screen when you step outside? Or how your car can automatically switch on the headlights when it gets dark? The answer lies in sensors and, more specifically, their sensitivity. Sensitivity is how strongly a sensor's output responds to a change in whatever it measures. It's not about being easily offended (like some people we know!), but about picking up on subtle signals. A highly sensitive sensor produces a clear change in output for even a small change in input, while a less sensitive one needs a much bigger shift to register anything. It's the difference between a microphone that picks up a pin dropping and one that only notices a slammed door. Big difference, right?

Sensitivity isn't a one-size-fits-all kind of thing. It depends entirely on what the sensor is designed to measure. A temperature sensor, for example, needs to be sensitive enough to detect slight variations in heat, whereas a pressure sensor on heavy machinery may care more about covering a wide range of forces than about resolving tiny ones. So, before you even think about measuring sensitivity, you need to understand what type of sensor you're dealing with and what it's supposed to be doing. Ignoring this step is like trying to bake a cake without knowing the ingredients: it's probably not going to end well.

Why is this even important? Well, consider a medical sensor designed to detect a specific protein in blood. If it's not sensitive enough, it might miss early signs of a disease, potentially leading to delayed treatment. Or, imagine a smoke detector that only goes off when the room is already engulfed in flames — not exactly ideal, is it? Accurate sensitivity is crucial for reliability and can, quite literally, be a matter of life and death in some applications. It's definitely not something you want to gloss over.

So, in simple terms, sensor sensitivity is all about how well a sensor picks up on subtle changes. Understanding this concept is the first step in figuring out how to measure it, which is what we're going to dive into next. Prepare yourself; it's not rocket science, but there are a few important things to keep in mind. Think of it as learning to dance — a little awkward at first, but satisfying once you get the hang of it!


Methods for Measuring Sensor Sensitivity

2. Different Approaches to Sensitivity Measurement

Okay, so we know what sensitivity is. Now, how do we actually measure it? There are several different methods, and the best one depends on the type of sensor and what you're trying to achieve. One common approach involves applying a known input and observing the sensor's output. Think of it like poking a bear with a stick (figuratively, of course!) — you want to see how it reacts. If you poke it gently and it roars, it's pretty sensitive. If you have to whack it repeatedly before it notices, well, not so much.

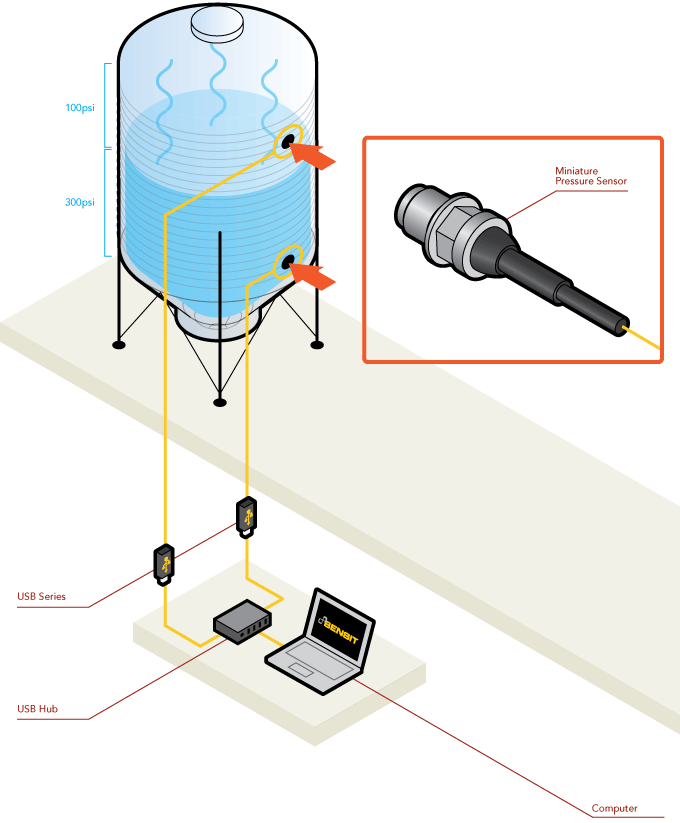

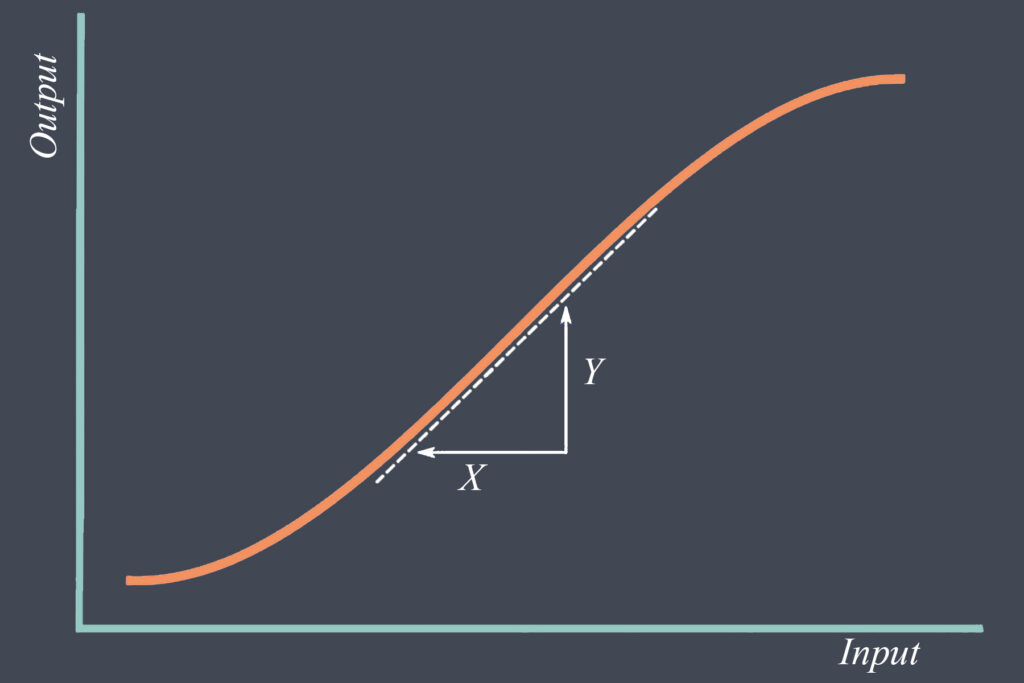

For example, with a light sensor, you might shine a light of known intensity on it and measure the resulting electrical signal. By gradually increasing the light intensity and plotting the output signal against it, you can create a calibration curve. This curve shows how the sensor responds to different levels of light, and its slope is the sensitivity: the change in output divided by the change in input, for example volts per lux. Similarly, for a pressure sensor, you could apply known pressures and measure the corresponding voltage or current output. The steeper the slope of the calibration curve, the more sensitive the sensor. If the curve isn't a straight line, the sensitivity simply varies across the range, so quote it at a stated operating point. Easy peasy, right? (Well, maybe not that easy, but you get the idea.)
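To make the calibration-curve idea concrete, here is a minimal sketch that fits a straight line to a handful of (input, output) pairs and reads the sensitivity off the slope. The data points, the units (lux and volts), and the helper name `fit_line` are all made up for illustration, and the sketch assumes the sensor is roughly linear over the range tested.

```python
def fit_line(inputs, outputs):
    """Ordinary least-squares fit of outputs = slope * inputs + offset."""
    n = len(inputs)
    if n != len(outputs) or n < 2:
        raise ValueError("need at least two matched (input, output) pairs")
    mean_x = sum(inputs) / n
    mean_y = sum(outputs) / n
    sxx = sum((x - mean_x) ** 2 for x in inputs)
    if sxx == 0:
        raise ValueError("inputs must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(inputs, outputs))
    slope = sxy / sxx
    offset = mean_y - slope * mean_x
    return slope, offset


# Hypothetical calibration data: known light levels (lux) and measured output (V).
lux = [0.0, 100.0, 200.0, 300.0, 400.0]
volts = [0.10, 0.60, 1.10, 1.60, 2.10]

# The slope of the fitted line is the sensitivity in volts per lux.
sensitivity, offset = fit_line(lux, volts)
print(f"sensitivity = {sensitivity:.4f} V/lux, offset = {offset:.3f} V")
```

With these made-up numbers the slope comes out at about 0.005 V/lux. For a sensor with a curved response, the same fit could be applied to a narrow window of points around the operating point of interest.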

Another approach involves using specialized equipment like signal generators and oscilloscopes. These tools allow you to precisely control the input signal and accurately measure the output signal. This approach is often used for more complex sensors or when you need a very precise measurement of sensitivity. Imagine using a laser scalpel instead of a butter knife: it gives you much finer control and more accurate results. You can also employ statistical methods to analyze the sensor's response and state its sensitivity with a certain level of confidence. This is particularly useful when dealing with noisy signals or when you need to account for variations in the sensor's performance.
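As one possible reading of "statistical methods", the sketch below takes the sensitivities obtained from several repeated calibration runs and reports their mean with an approximate 95% confidence interval. The run values are invented, and the interval assumes the run-to-run scatter is roughly normal. The constant 2.776 is the standard two-sided 95% Student-t value for 4 degrees of freedom (five runs); a different number of runs needs a different value.

```python
import math
import statistics

# Hypothetical sensitivities (V/lux) from five repeated calibration runs.
runs = [0.00498, 0.00503, 0.00501, 0.00497, 0.00501]

mean_s = statistics.mean(runs)
std_s = statistics.stdev(runs)          # sample standard deviation
sem = std_s / math.sqrt(len(runs))      # standard error of the mean

# Two-sided 95% Student-t critical value for len(runs) - 1 = 4 degrees of freedom.
T_95_DF4 = 2.776
half_width = T_95_DF4 * sem

print(f"sensitivity = {mean_s:.5f} +/- {half_width:.5f} V/lux (approx. 95% CI)")
```

A wide interval relative to the mean is a hint that noise or uncontrolled conditions are affecting the measurement, and that more runs or a steadier setup would help.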

No matter which method you choose, it's crucial to control the environmental conditions. Temperature, humidity, and even electromagnetic interference can all affect the sensor's performance and throw off your measurements. Imagine trying to measure the weight of a feather in a hurricane — good luck with that! So, make sure you're working in a stable, controlled environment and using calibrated equipment to ensure the accuracy of your measurements.

Factors Affecting Sensor Sensitivity

3. External and Internal Factors

Even the most carefully designed experiment can be derailed by unforeseen circumstances. And when it comes to measuring sensor sensitivity, there are a number of factors that can influence your results. We briefly mentioned environmental factors like temperature and humidity. These can directly impact the sensor's internal components, leading to changes in its performance. For example, a resistor might change its resistance with temperature, affecting the overall output signal. Think of it like trying to run a marathon in the desert heat — your performance is definitely going to suffer!
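The resistor example can be put into numbers with the usual first-order model, R(T) = R_ref * (1 + alpha * (T - T_ref)). The sketch below is only an illustration: the 10 kΩ value, the 100 ppm/°C temperature coefficient, and the function name `resistance_at` are assumptions, and real parts can deviate from a purely linear model.

```python
def resistance_at(temp_c, r_ref_ohm, alpha_per_c, t_ref_c=25.0):
    """Linear temperature model: R(T) = R_ref * (1 + alpha * (T - T_ref))."""
    return r_ref_ohm * (1.0 + alpha_per_c * (temp_c - t_ref_c))


# Hypothetical part: 10 kOhm at 25 C with a 100 ppm/C temperature coefficient.
R_REF = 10_000.0
ALPHA = 100e-6

r_hot = resistance_at(65.0, r_ref_ohm=R_REF, alpha_per_c=ALPHA)
drift_percent = 100.0 * (r_hot - R_REF) / R_REF

print(f"R at 65 C = {r_hot:.1f} ohm ({drift_percent:+.2f}% vs. 25 C)")
```

A shift of a fraction of a percent sounds small, but if that resistor sets the gain of the measurement circuit, the apparent sensitivity shifts by the same fraction.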

Another important factor is the sensor's operating conditions. Operating a sensor outside of its specified range can not only damage it but also lead to inaccurate measurements. It's like trying to drive a car at 200 mph — it's probably not going to end well! Also, the age of the sensor can play a role. Over time, sensors can degrade, leading to a decrease in sensitivity. This is especially true for sensors that are exposed to harsh environments or used frequently. It's like an old pair of shoes — they might still work, but they're not as effective as they used to be.

Calibration is absolutely essential. Regularly calibrating your sensors against known standards ensures that they are providing accurate and reliable measurements. Think of it like tuning a musical instrument — if it's not properly tuned, it's going to sound terrible! Finally, the quality of the sensor itself can significantly impact its sensitivity. A poorly manufactured sensor might have inherent limitations that prevent it from achieving its full potential. It's like buying a cheap knock-off instead of a high-quality product — you get what you pay for.

Ultimately, understanding these factors and taking steps to mitigate their effects is crucial for obtaining accurate and meaningful measurements of sensor sensitivity. Don't just blindly trust the sensor's output — always be aware of the potential sources of error and take steps to minimize them. This attention to detail is what separates a good measurement from a questionable one.


Calibrating for Accurate Sensitivity Measurement

4. Calibration Process

Think of calibration as giving your sensor a regular check-up to ensure it's performing at its best. It's the process of comparing the sensor's output to a known standard and adjusting it to match. Without calibration, you're essentially flying blind, hoping that your measurements are accurate. Spoiler alert: they probably aren't! The first step in calibration is to choose a suitable standard. This could be a precisely calibrated light source for a light sensor, a pressure gauge for a pressure sensor, or a temperature bath for a temperature sensor.

Next, you apply the standard to the sensor and measure its output. You then compare the measured output to the known value of the standard. If there's a discrepancy, you need to adjust the sensor's settings to eliminate the error. This might involve adjusting potentiometers, changing software parameters, or applying a correction factor to the readings. The specific procedure will depend on the type of sensor and its design. Once you've adjusted the sensor, you should repeat the measurement to verify that it's now accurate. You might need to iterate through this process several times to achieve the desired level of accuracy.
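One common way to "apply a correction factor" is a two-point calibration: measure the sensor at a low and a high reference value, then derive a gain and an offset that map raw readings onto the reference scale. The sketch below assumes a linear sensor. The pressure values and the function names are hypothetical.

```python
def two_point_correction(raw_low, raw_high, ref_low, ref_high):
    """Return (gain, offset) so that gain * raw + offset matches the references."""
    if raw_high == raw_low:
        raise ValueError("the two raw readings must differ")
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return gain, offset


def apply_correction(raw, gain, offset):
    """Map a raw reading onto the reference scale."""
    return gain * raw + offset


# Hypothetical pressure sensor: reads 2.0 kPa at a 0.0 kPa reference
# and 101.0 kPa at a 100.0 kPa reference.
gain, offset = two_point_correction(raw_low=2.0, raw_high=101.0,
                                    ref_low=0.0, ref_high=100.0)

corrected_mid = apply_correction(51.5, gain, offset)
print(f"gain = {gain:.4f}, offset = {offset:.4f} kPa, 51.5 raw -> {corrected_mid:.2f} kPa")
```

After deriving the correction, re-measuring at the reference points (and ideally at a third point in between) is the verification step described above.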

Calibration shouldn't be a one-time event. Sensors can drift over time due to various factors, so regular recalibration is necessary to maintain accuracy. The frequency of calibration will depend on the sensor's application, its operating environment, and the level of accuracy required. As a general rule, it's better to err on the side of caution and calibrate more frequently than you think you need to. Maintaining detailed records of your calibration process is also crucial. This will allow you to track the sensor's performance over time and identify any trends or issues that might require attention. Think of it like keeping a logbook for your car — it helps you stay on top of maintenance and identify potential problems before they become major headaches.
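Calibration records become useful once you actually compare them. As a rough sketch, the snippet below keeps a small log of the sensitivity found at each calibration and flags the sensor when it has drifted from its first recorded value by more than a chosen tolerance. The dates, values, and the 2% threshold are invented for illustration; a sensible tolerance depends on the application.

```python
# Hypothetical calibration log: (date, measured sensitivity in V/lux).
calibration_log = [
    ("2024-01-15", 0.00500),
    ("2024-07-15", 0.00495),
    ("2025-01-15", 0.00488),
]

TOLERANCE_PERCENT = 2.0  # assumed acceptance limit, not a standard value


def drift_percent(log):
    """Percent change of the latest sensitivity relative to the first entry."""
    baseline = log[0][1]
    latest = log[-1][1]
    return 100.0 * (latest - baseline) / baseline


drift = drift_percent(calibration_log)
needs_attention = abs(drift) > TOLERANCE_PERCENT

print(f"drift since first calibration: {drift:+.1f}%")
print("needs attention" if needs_attention else "within tolerance")
```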

In conclusion, consistent calibration is the cornerstone of accurate sensitivity measurement. Without it, your results are questionable at best. So, embrace the calibration process, treat it with respect, and your sensors will reward you with reliable and meaningful data.


Practical Applications

5. Real World Examples

So, we've talked about the theory and the methods, but where does all this actually matter in the real world? Well, sensor sensitivity plays a critical role in countless applications, from the mundane to the life-saving. Think about your smartphone again. The ambient light sensor adjusts the screen brightness based on the surrounding light levels. If the sensor weren't sensitive enough, you'd be squinting at your screen in bright sunlight or blinded by it in a dark room. Not exactly ideal for binge-watching cat videos, right?

In the medical field, sensor sensitivity is even more crucial. Medical devices rely on highly sensitive sensors to monitor vital signs, detect diseases, and deliver medications. For example, glucose monitors used by diabetics need to accurately measure blood sugar levels with minimal error. A sensor that's not sensitive enough could lead to inaccurate readings, potentially resulting in dangerous health consequences. Similarly, in environmental monitoring, sensors are used to detect pollutants, measure air quality, and monitor weather patterns. These sensors need to be sensitive enough to detect even trace amounts of harmful substances or subtle changes in the environment.

Automotive safety systems also rely heavily on sensor sensitivity. Airbag deployment systems use sensors to detect collisions and deploy airbags in milliseconds. These sensors need to be incredibly sensitive and reliable to prevent injuries in an accident. Autonomous vehicles use a variety of sensors, including radar, lidar, and cameras, to perceive their surroundings. The sensitivity of these sensors is critical for navigating safely and avoiding obstacles. Imagine a self-driving car with a lousy sensor — you wouldn't want to be a pedestrian in its path!

From the everyday convenience of adjusting your screen brightness to the life-saving accuracy of medical devices, sensor sensitivity is a fundamental aspect of modern technology. Understanding how to measure and optimize it is essential for ensuring the reliability and performance of countless devices and systems that we rely on every day. So, the next time you use a sensor-equipped gadget, take a moment to appreciate the intricate engineering and precise calibration that makes it all possible. It's a small detail that makes a big difference.


Frequently Asked Questions (FAQs)

6. Your burning questions, answered!

Let's address some common questions about measuring sensor sensitivity. If you're still scratching your head about something, this section is for you!

Q: What's the difference between sensitivity and resolution?
A: That's a great question! Sensitivity, as we've discussed, is how much the sensor output changes for a given change in the input. Resolution, on the other hand, is the smallest change in the input that the sensor can detect. Think of it like this: sensitivity is how loud the sensor shouts when something changes, while resolution is how small a whisper it can still hear. A sensor can be very sensitive but have poor resolution, or vice versa.
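A quick numeric illustration of that difference, under the assumption that the readout (not noise) is what limits resolution: take a hypothetical temperature sensor with a sensitivity of 10 mV/°C and read it with an analog-to-digital converter. The smallest voltage step the converter can distinguish, divided by the sensitivity, gives the smallest temperature change that shows up in the reading. The function name and all values are illustrative.

```python
def input_resolution(sensitivity_v_per_unit, v_ref, adc_bits):
    """Smallest input change that moves the ADC reading by one step."""
    lsb_volts = v_ref / (2 ** adc_bits)   # size of one ADC step in volts
    return lsb_volts / sensitivity_v_per_unit


SENSITIVITY = 0.010   # hypothetical sensor: 10 mV per degree C
V_REF = 5.0           # assumed ADC reference voltage

res_10bit = input_resolution(SENSITIVITY, V_REF, adc_bits=10)
res_12bit = input_resolution(SENSITIVITY, V_REF, adc_bits=12)

print(f"10-bit readout: {res_10bit:.3f} C per step")
print(f"12-bit readout: {res_12bit:.3f} C per step")
```

The sensitivity is identical in both cases, yet the resolution differs by a factor of four, which is why the two terms should not be used interchangeably.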

Q: How often should I calibrate my sensors?
A: The frequency of calibration depends on several factors, including the sensor's type, application, operating environment, and required accuracy. As a general rule, calibrate more frequently for critical applications or harsh environments. Refer to the sensor's datasheet for specific recommendations, and when in doubt, err on the side of caution. Remember, it's better to be safe than sorry!

Q: Can I improve the sensitivity of a sensor after it's been manufactured?
A: In some cases, yes, but it's often limited. Calibration can help optimize the sensor's performance and minimize errors. Signal processing can help too: filtering or averaging improves the signal-to-noise ratio, so smaller changes become detectable, while amplification raises the output per unit of input (though it boosts the noise along with the signal). However, the inherent limitations of the sensor's design and materials will ultimately limit how much you can improve its sensitivity. Sometimes, you just need a better sensor!
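To see why filtering and amplification are not the same thing, here is a small simulation with made-up numbers. It generates noisy readings of a constant signal, then compares two treatments: averaging blocks of 16 samples, and multiplying every sample by a gain of 10. The noise is assumed to be random and uncorrelated from sample to sample, and the amplifier is idealized as adding no noise of its own.

```python
import random
import statistics

random.seed(0)  # fixed seed so the simulation is repeatable

TRUE_VALUE = 1.0   # hypothetical steady sensor output in volts
NOISE_STD = 0.05   # hypothetical noise level in volts
samples = [TRUE_VALUE + random.gauss(0.0, NOISE_STD) for _ in range(4000)]


def block_average(values, n):
    """Average consecutive, non-overlapping blocks of n samples."""
    return [sum(values[i:i + n]) / n for i in range(0, len(values) - n + 1, n)]


def snr(values):
    """Signal-to-noise ratio as mean divided by standard deviation."""
    return statistics.mean(values) / statistics.pstdev(values)


averaged = block_average(samples, 16)
GAIN = 10.0
amplified = [GAIN * s for s in samples]

snr_raw = snr(samples)
snr_avg = snr(averaged)
snr_amp = snr(amplified)

print(f"raw SNR:       {snr_raw:.1f}")
print(f"averaged SNR:  {snr_avg:.1f}")
print(f"amplified SNR: {snr_amp:.1f}")
```

Averaging 16 samples should improve the ratio by roughly a factor of four (the square root of 16), at the cost of a slower response. The idealized gain stage leaves the ratio unchanged, although in practice amplifying early can still help by lifting the signal above the noise of later stages.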